It’s one of those problems that every IT pro, sysadmin, or power user dreads. Not a blue screen, not a server-down emergency, but a small, persistent, and maddening “ghost in the machine.”

For me, it was a flashing cursor.

For about five minutes every few hours, my mouse cursor in Windows 11 would flash the “waiting” or “processing” icon. Every. Single. Second.

As a problem, it was just annoying. But as a puzzle, it was infuriating. My system was fully up-to-date, drivers were current (or how I liked them), and resources were normal. Task Manager showed… nothing. No CPU spikes, no disk thrashing, no memory leaks.

I work in IT. These sorts of things shouldn’t happen to me!

Who is going to help me!?? I am THE HELPDESK!!

(Or at least I passed through that title on the way to my current position.)

Why, oh why, is this happening to me?

This is a user problem, not something that I should have to diagnose and solve on… my own device?

I could have spent the next four hours solving it the old-fashioned way. Instead, I did it in under 30 minutes by using an AI as my troubleshooting co-pilot. This is the story of how that collaboration worked, and why it’s a game-changer for IT pros – at least in some situations.

The Problem: A Ghost in the Machine

My first instinct was to use the process of elimination. The “human” part of the troubleshooting.

- Was it my screenshot tool, `picpick.exe`? I killed the process. Nope.

- Was it a stuck `powershell` or `wt.exe` script? Killed those too. No change.

- Was it a browser tab? Or a browser process? Or a Windows app? Restarted Brave. Restarted that long-running Google updater/Chrome process. Restarted EdgeWebView2 (which many modern Windows apps use). Still flashing.

- Was it the classic, `explorer.exe`? Restarted it. Nothing.

I was 15 minutes in, and all I had done was prove what wasn’t the problem. Not necessarily a bad thing.

My next step was to break out the heavy-duty logging tools, dig through a million lines of text, and resign myself to a long, tedious hunt.

This is the “grunt work” of IT – the part of the job I can do, but don’t exactly enjoy.

The “AI Nudge”: Asking for a Second Pair of Eyes

Instead of diving into that digital haystack of logs, I took a different approach. I opened an AI assistant.

I didn’t ask it to “fix my PC.” That’s not how this works. I treated it like a junior sysadmin or a “second pair of eyes.” I explained the symptoms and what I had already tried.

My prompt was something like:

"I've got an intermittent flashing 'waiting' cursor on Windows 11. It's not a high-CPU process; Task Manager is clean. I've already restarted explorer and other common apps. I suspect it's a process starting and stopping too fast to see. What's the best way to catch it, which logs should we look at first, or which tools should we spin up?"

The AI’s response was the “force multiplier.”

It didn’t give me a magic answer. It gave me a precise, actionable workflow. It validated my theory (a fast process loop) and recommended the perfect tool and the exact filter to find it. It basically said, “You’re right. Now, go here, use this tool, and apply this specific filter to see only newly created processes.”

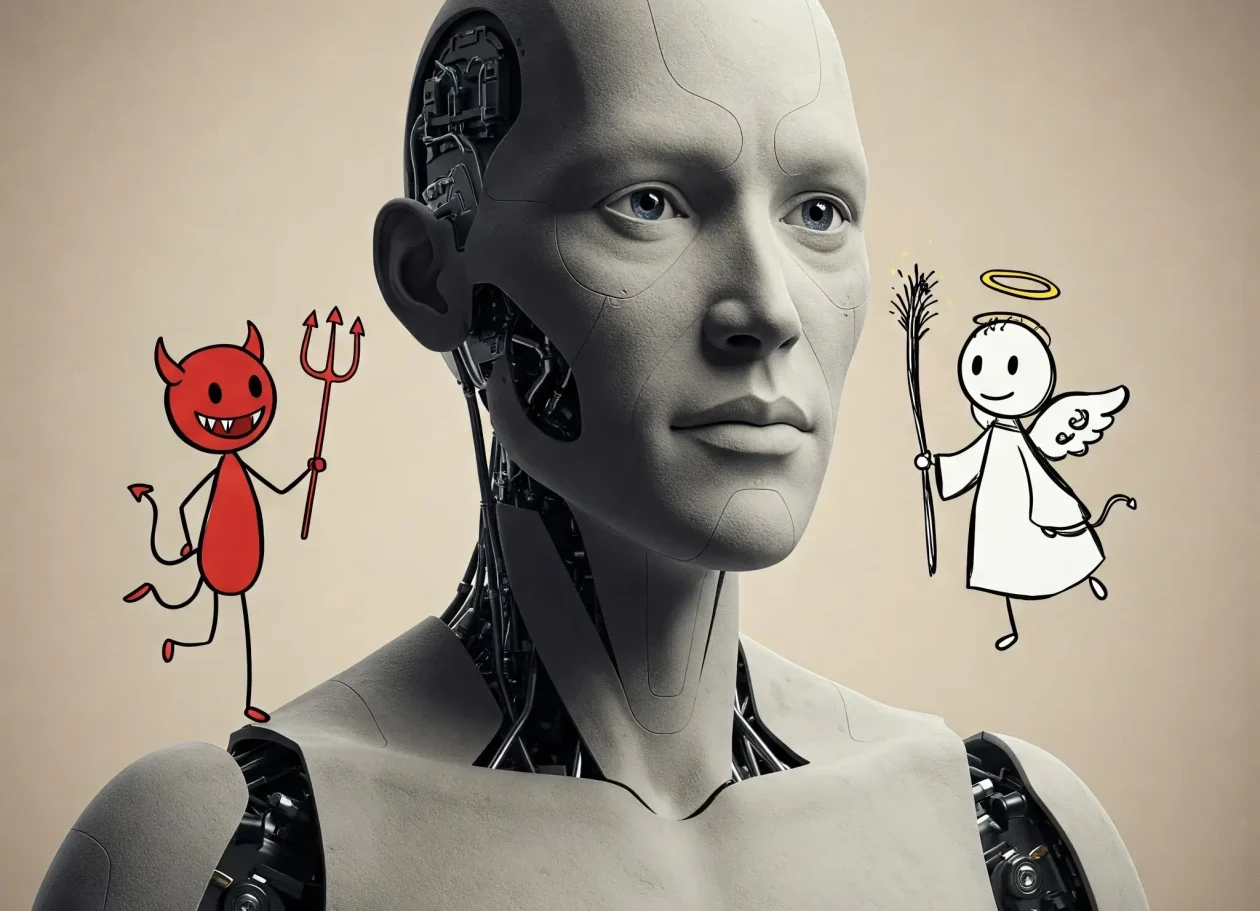

This is the power of human-AI collaboration. The AI didn’t replace my skill; it augmented it. It saved me 30 minutes of searching through old notes, Googling, and trying to remember the exact syntax for a tool I use maybe six times a year.

Collaboration: From Digital Haystack to Prime Suspect

With the AI’s “nudge,” I had my prime suspect in less than 60 seconds.

I ran the tool with the filter, and what was previously an overwhelming flood of data became a crystal-clear, one-line-per-second log of the exact same process being created and destroyed.
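To make the idea concrete, here is a minimal Python sketch of that kind of filter, not the exact workflow from this post. It assumes the capture was exported to CSV with `Process Name` and `Operation` columns, and that launches appear as `Process Start` rows (my understanding of what a Procmon export looks like). The sample rows and the `example-helper.exe` name are invented for illustration.

```python
import csv
import io
from collections import Counter


def count_process_starts(csv_text, operation="Process Start"):
    """Count rows per process name whose Operation column equals `operation`."""
    reader = csv.DictReader(io.StringIO(csv_text))
    counts = Counter()
    for row in reader:
        if row.get("Operation") == operation:
            counts[row.get("Process Name", "unknown")] += 1
    return counts


# Hypothetical sample data, shaped like a trimmed-down capture export.
SAMPLE_CSV = """Time of Day,Process Name,PID,Operation
10:00:01,example-helper.exe,4100,Process Start
10:00:01,example-helper.exe,4100,Process Exit
10:00:02,example-helper.exe,4108,Process Start
10:00:02,notepad.exe,5120,Process Start
10:00:03,example-helper.exe,4116,Process Start
"""

if __name__ == "__main__":
    # The process that restarts most often floats to the top.
    for name, starts in count_process_starts(SAMPLE_CSV).most_common(3):
        print(f"{name}: {starts} starts")
```

A process that shows up with one start per second stands out immediately once everything else is filtered away, which is the whole point of the filter.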

I’m writing a full, technical step-by-step tutorial on this exact method (at some point!), but the short version is: the filter worked perfectly.

The process name immediately told me it was a system component related to network connections. This is where I, the human, took back control.

- AI Clue: It’s a network process.

- Human Hunch: If the client is spamming a network request, the server must be rejecting it.

I immediately logged into my network-attached storage (NAS) / file server and opened the access logs.

Bingo.

A wall of red: “Failed to log in.” My PC’s IP address, every single second, trying and failing to authenticate.
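If your server lets you export its access log as text, the same pattern can be confirmed with a few lines of Python. This is only a sketch: the line format below is hypothetical (every NAS vendor logs differently), and the IP addresses are placeholders, so the regular expression would need adjusting to whatever your device actually writes.

```python
import re
from collections import Counter

# Hypothetical line format: "<date> <time> <message> from <ip>".
FAILED_LOGIN = re.compile(r"Failed to log in.*?from (\d{1,3}(?:\.\d{1,3}){3})")


def failed_logins_by_ip(lines):
    """Count failed-login lines per client IP address."""
    counts = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


# Invented sample lines, for illustration only.
SAMPLE_LOG = [
    "2025-01-01 10:00:01 Failed to log in from 192.168.1.50",
    "2025-01-01 10:00:02 Failed to log in from 192.168.1.50",
    "2025-01-01 10:00:02 Logged in from 192.168.1.77",
    "2025-01-01 10:00:03 Failed to log in from 192.168.1.50",
]

if __name__ == "__main__":
    for ip, failures in failed_logins_by_ip(SAMPLE_LOG).most_common():
        print(f"{ip}: {failures} failed logins")
```

One address with a failure count that climbs by roughly one per second is the server-side mirror of the process loop on the client.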

The “Aha!” Moment and the 5-Minute Fix

I now had two pieces of the puzzle: a network process on my PC failing in a loop, and a file server rejecting its login. However, when I tested it, I could still access the file share. Nothing seemed to be blocked, and everything was working as expected (other than my BLINKING CURSOR!).

I could have figured it out from here, but I turned back to my AI co-pilot for the “why.” I fed it the two new clues:

"I've got this process spamming, and my server is blocking it but I still have access? What is going on here and what process could be causing this if everything works as it should?"

My AI buddy instantly provided the obscure, “textbook” knowledge. It explained a specific, built-in Windows fallback behaviour. When a primary connection to a network share (via the normal SMB protocol) fails, Windows will sometimes try to “help” by falling back to a different protocol (WebDAV), creating this exact kind of rapid-fire loop.

The root cause was that I had updated my file server’s software a few days earlier, and my PC was still trying to use an old, expired, cached credential. Part of it had updated; the other, seldom-used part (the web browser access fallback element) had not caught up. And according to my AI, once started, the process was ‘handed off’ to the ‘system’ to complete, so it was not tied to a browser, which is why restarting or closing the browser had not cleared the issue.

The fix was laughably simple.

- I went to Windows Credential Manager.

- I found the saved credential for my file server.

- I clicked Remove.

- I browsed to the server again and re-typed my password.

The flashing stopped. Instantly. The problem was solved.
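For anyone who prefers the command line to the Credential Manager window, Windows also ships the `cmdkey` tool (`cmdkey /list` to show saved entries, `cmdkey /delete:<target>` to remove one). The Python sketch below only parses a listing and builds the delete command; it does not run anything. The output layout is assumed from what `cmdkey /list` typically prints, and `nas01` is a hypothetical server name.

```python
def saved_targets_for(cmdkey_output, server):
    """Return saved credential target names that mention `server`."""
    names = []
    for line in cmdkey_output.splitlines():
        line = line.strip()
        if not line.lower().startswith("target:"):
            continue
        # "Target: Domain:target=nas01" -> "Domain:target=nas01" -> "nas01"
        target = line.split(":", 1)[1].strip()
        name = target.split("target=", 1)[-1]
        if server.lower() in name.lower():
            names.append(name)
    return names


def delete_command(name):
    """Build (but do not run) the cmdkey command that removes one entry."""
    return ["cmdkey", f"/delete:{name}"]


# Assumed shape of `cmdkey /list` output; the entries are invented.
SAMPLE_OUTPUT = """
Currently stored credentials:

    Target: Domain:target=nas01
    Type: Domain Password
    User: me

    Target: LegacyGeneric:target=example-app
    Type: Generic
    User: me
"""

if __name__ == "__main__":
    for name in saved_targets_for(SAMPLE_OUTPUT, "nas01"):
        print(" ".join(delete_command(name)))
```

On a real machine the listing could come from `subprocess.run(["cmdkey", "/list"], capture_output=True, text=True).stdout`. After deleting the entry, browse to the server again and re-enter the password, exactly as in the manual steps.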

AI Isn’t My Replacement, It’s My Co-Pilot

What would have been a long, annoying afternoon of troubleshooting was over before my coffee got cold.

AI didn’t solve the problem. I solved the problem.

But AI acted as the perfect co-pilot. It streamlined the most tedious parts of the process, provided the “second opinion” to keep me on track, and supplied the deep, “encyclopedic” knowledge when I needed it.

It let me skip the grunt work and focus on the smart work – the analysis, the hunch, and the fix.

This is the future of IT. It’s not about being replaced by AI; it’s about being 10x more effective by using it.

If you’re curious about the specific tools and filters I used to catch that rogue process, keep an eye out for my next post: “[SOLVED] Beyond Task Manager: Simple Guide to Finding Process Loops with Process Explorer and Procmon.” – when I eventually post it!
